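Moltbot (formerly Clawdbot) is an open-source personal AI assistant project that suddenly went viral in January 2026. It is a developer productivity tool that pushes the combination of local compute and LLM agent automation to the extreme; you can think of it as something like the “豆包AI手机” (Doubao AI Phone).

⏱️ Estimated deployment time: 20-25 minutes

Avoid deploying it on the computer you use every day; it may break your system.

Official site: https://openclaw.ai

It has since been renamed: OpenClaw!

1. Install OpenClaw

Installation takes just one command: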

curl -fsSL https://openclaw.ai/install.sh | bash
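Piping a remote script straight into bash runs it unseen. Given the warning above about breaking your system, here is a minimal sketch of a more cautious variant. It downloads the same installer to a temporary file, lets you page through it, and runs it only after you confirm. The function name install_openclaw_reviewed is hypothetical, and nothing about the installer's contents is assumed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: download the installer, show it, and run it only after an explicit "y".
# The default URL is the one used in the one-line command above.
install_openclaw_reviewed() {
  local url="${1:-https://openclaw.ai/install.sh}"
  local tmp answer status=0
  tmp="$(mktemp)" || return 1

  # Save the script to a temp file instead of piping it straight into bash.
  if ! curl -fsSL "$url" -o "$tmp"; then
    echo "download failed: $url" >&2
    rm -f "$tmp"
    return 1
  fi

  # Page through it (PAGER=cat just prints it).
  "${PAGER:-less}" "$tmp"

  read -r -p "Run this installer? [y/N] " answer
  if [[ "$answer" == [yY] ]]; then
    bash "$tmp" || status=$?
  else
    echo "skipped" >&2
  fi
  rm -f "$tmp"
  return "$status"
}
```

This only lets you see what will run; it does not change what the installer does.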

$ curl -fsSL https://openclaw.ai/install.sh | bash

🦞 OpenClaw Installer

Give me a workspace and I'll give you fewer tabs, fewer toggles, and more oxygen.

✓ Detected: linux

✓ Node.js v22.22.0 found

✓ Git already installed

→ Installing OpenClaw 2026.1.30...

→ npm install failed; cleaning up and retrying...

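The installation failed. After checking the project's GitHub page, I switched to installing it directly with npm (Node.js). My Node.js version is v22.22.0.

Command: npm install -g openclaw@latest

This failed too: cmake was missing, so the postinstall step of the node-llama-cpp dependency could not build llama.cpp.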

$ npm install -g openclaw@latest
npm warn deprecated npmlog@6.0.2: This package is no longer supported.
npm warn deprecated are-we-there-yet@3.0.1: This package is no longer supported.
npm warn deprecated gauge@4.0.4: This package is no longer supported.
npm warn deprecated node-domexception@1.0.0: Use your platform's native DOMException instead
npm warn deprecated tar@6.2.1: Old versions of tar are not supported, and contain widely publicized security vulnerabilities, which have been fixed in the current version. Please update. Support for old versions may be purchased (at exhorbitant rates) by contacting i@izs.me
npm error code 1
npm error path /data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp
npm error command failed
npm error command sh -c node ./dist/cli/cli.js postinstall
npm error [node-llama-cpp] Cloning llama.cpp
npm error [node-llama-cpp] ✔ Cloned ggml-org/llama.cpp (local bundle)
npm error [node-llama-cpp] ◷ Downloading cmake
npm error [node-llama-cpp] ✖ Failed to download cmake
npm error [node-llama-cpp] To install "cmake", you can run "sudo apt update && sudo apt install -y cmake"
npm error [node-llama-cpp] The prebuilt binary for platform "linux" "x64" is not compatible with the current system, falling back to building from source
npm error sh: 1: xpm: not found
npm error [node-llama-cpp] Failed to build llama.cpp with no GPU support. Error: Error: cmake not found
npm error at getCmakePath (file:///data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp/dist/utils/cmake.js:52:11)
npm error at async compileLlamaCpp (file:///data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp/dist/bindings/utils/compileLLamaCpp.js:167:66)
npm error at async buildAndLoadLlamaBinary (file:///data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp/dist/bindings/getLlama.js:422:5)
npm error at async getLlamaForOptions (file:///data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp/dist/bindings/getLlama.js:266:21)
npm error at async Object.handler (file:///data00/home/wangdefeng.1/.npm-global/lib/node_modules/openclaw/node_modules/node-llama-cpp/dist/cli/commands/OnPostInstallCommand.js:22:13)

npm error A complete log of this run can be found in: /home/wangdefeng.1/.npm/_logs/2026-01-31T16_37_16_228Z-debug-0.log
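The root cause is visible in the log. The prebuilt node-llama-cpp binary was not compatible, so its postinstall step tried to build llama.cpp from source and could not find cmake. Below is a minimal pre-flight sketch that catches this before you run npm install -g openclaw@latest. The Node 22 threshold is an assumption taken only from the installer output above (which accepted v22.22.0); it is not a documented minimum. The tool list mirrors the packages installed in the next step. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Assumption: 22 is used only because the installer above accepted Node v22.22.0.
MIN_NODE_MAJOR=22

# Print the major version from a string such as "v22.22.0" -> "22".
node_major() {
  local v="${1#v}"   # drop a leading "v"
  echo "${v%%.*}"    # keep everything before the first "."
}

# Report missing or outdated prerequisites; return non-zero if any were found.
preflight() {
  local status=0 tool
  if ! command -v node >/dev/null 2>&1; then
    echo "missing: node"; status=1
  elif (( $(node_major "$(node -v)") < MIN_NODE_MAJOR )); then
    echo "node too old: $(node -v) (want >= v${MIN_NODE_MAJOR})"; status=1
  fi
  # Needed when node-llama-cpp falls back to building llama.cpp from source.
  for tool in cmake gcc g++; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"; status=1
    fi
  done
  return "$status"
}
```

Usage: preflight && npm install -g openclaw@latest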

2. Install cmake

# Install

# Ubuntu/Debian:

sudo apt update

sudo apt install -y cmake build-essential ninja-build

# CentOS/RHEL:

sudo yum install -y cmake gcc gcc-c++ make

sudo yum install -y ninja-build # if your repositories provide it

# Check that everything is installed

node -v

which cmake && cmake --version

which gcc && gcc --version

which g++ && g++ --version

which ninja && ninja --version

# Reinstall

npm install -g openclaw@latest

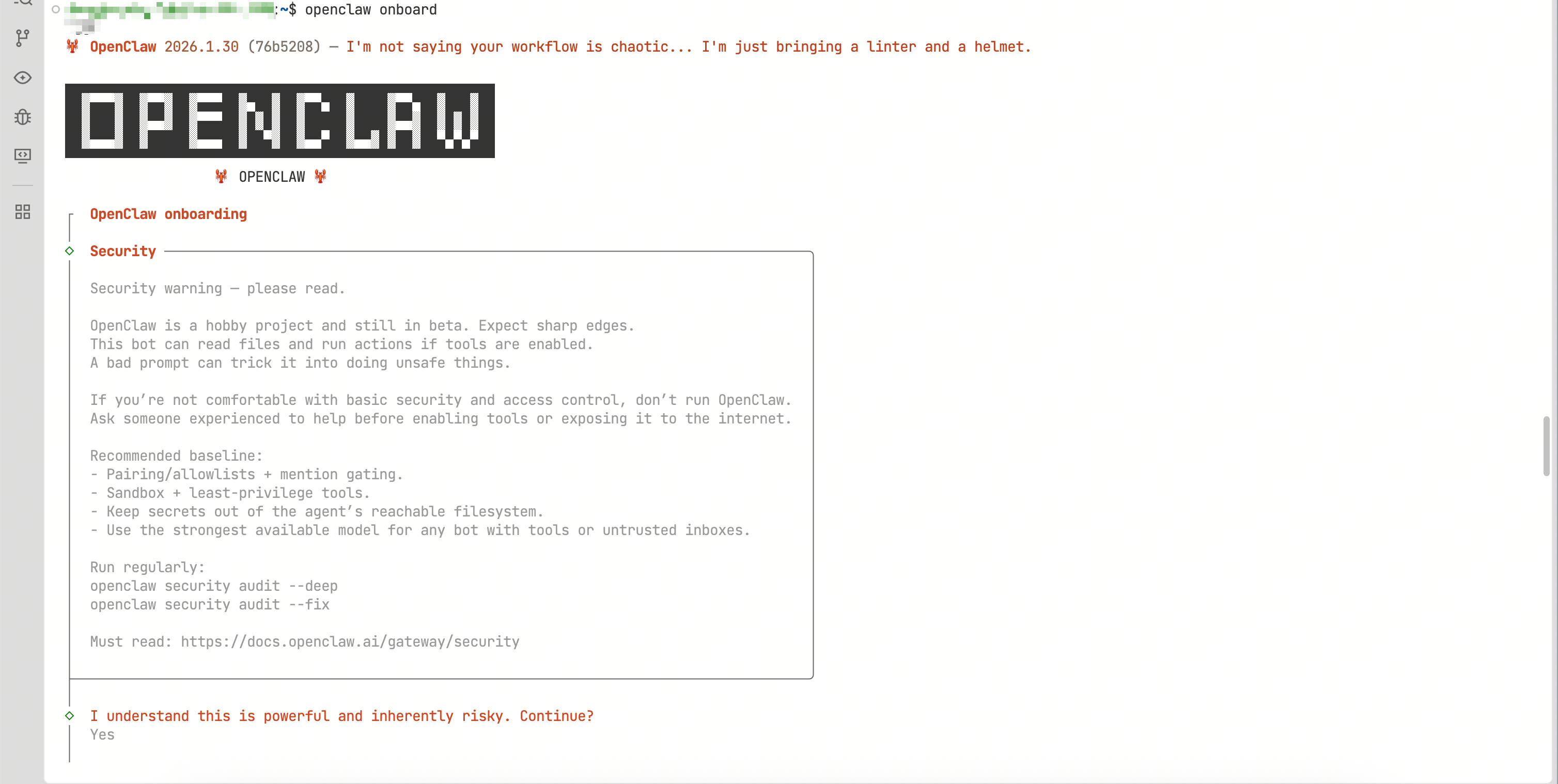

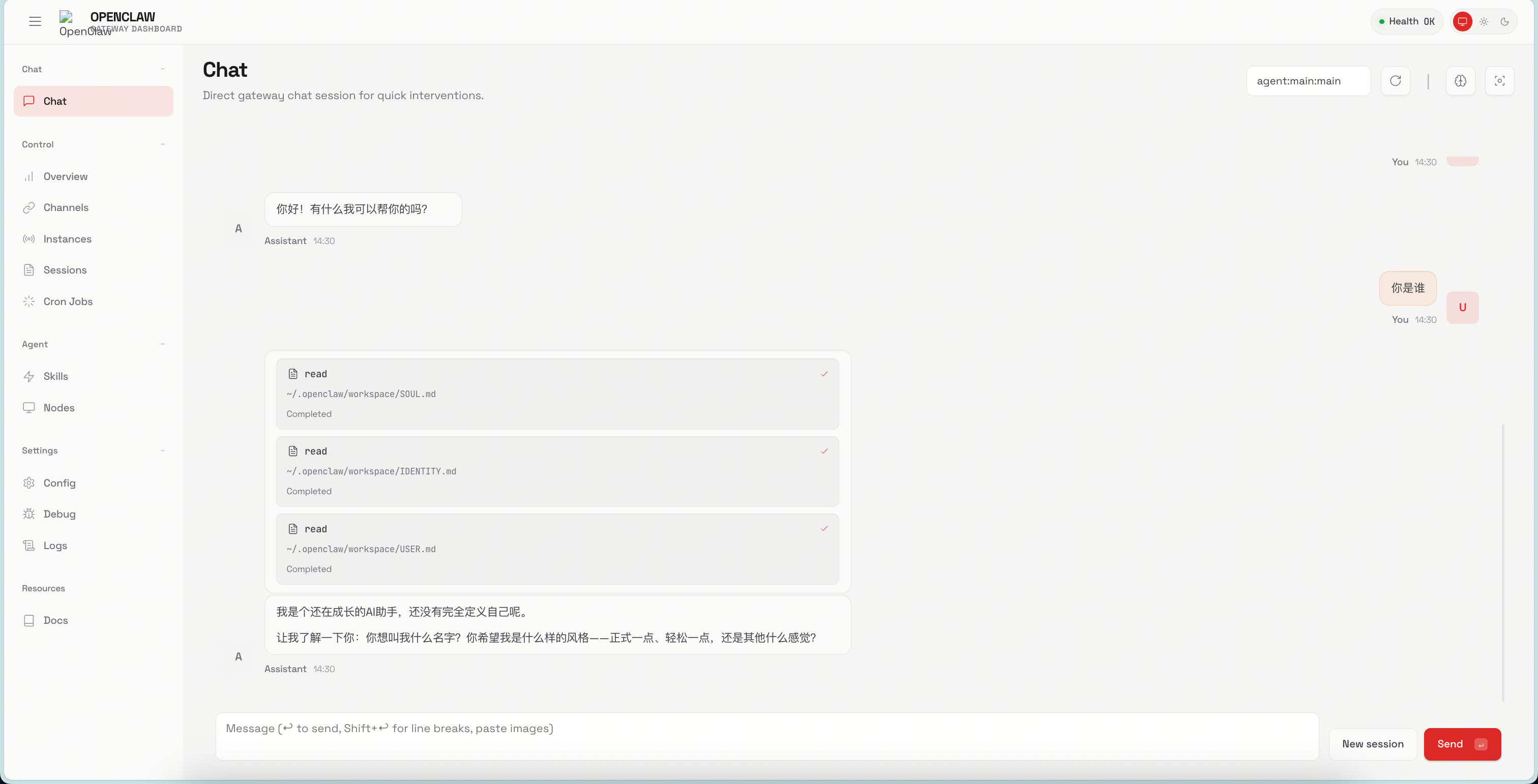
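The two distro-specific branches above can be folded into one script. This is a minimal sketch that relies only on the standard /etc/os-release file (its ID and ID_LIKE fields). It handles just the Debian/Ubuntu and CentOS/RHEL families this guide covers. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Map the ID / ID_LIKE values from /etc/os-release to a package-manager family.
pkg_family() {
  case " $1 " in
    *" debian "* | *" ubuntu "*)              echo apt ;;
    *" rhel "* | *" centos "* | *" fedora "*) echo yum ;;
    *)                                        echo unknown ;;
  esac
}

# Install the same packages as the manual steps above, then check the required tools.
install_build_tools() {
  local ID="" ID_LIKE="" tool
  # shellcheck disable=SC1091
  . /etc/os-release   # sets ID / ID_LIKE (into the locals above)
  case "$(pkg_family "$ID $ID_LIKE")" in
    apt)
      sudo apt update && sudo apt install -y cmake build-essential ninja-build || return 1 ;;
    yum)
      sudo yum install -y cmake gcc gcc-c++ make || return 1
      sudo yum install -y ninja-build || echo "ninja-build not available; continuing without it" >&2 ;;
    *)
      echo "unsupported distribution: ${ID:-unknown}" >&2; return 1 ;;
  esac
  for tool in cmake gcc g++; do
    command -v "$tool" >/dev/null 2>&1 || { echo "still missing: $tool" >&2; return 1; }
  done
}
```

Usage: install_build_tools && npm install -g openclaw@latest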

3. Initialize and start OpenClaw

# Initialize

openclaw onboard

# Enable shell auto-completion (append this line to ~/.bashrc to keep it across sessions)

source <(openclaw completion --shell bash)

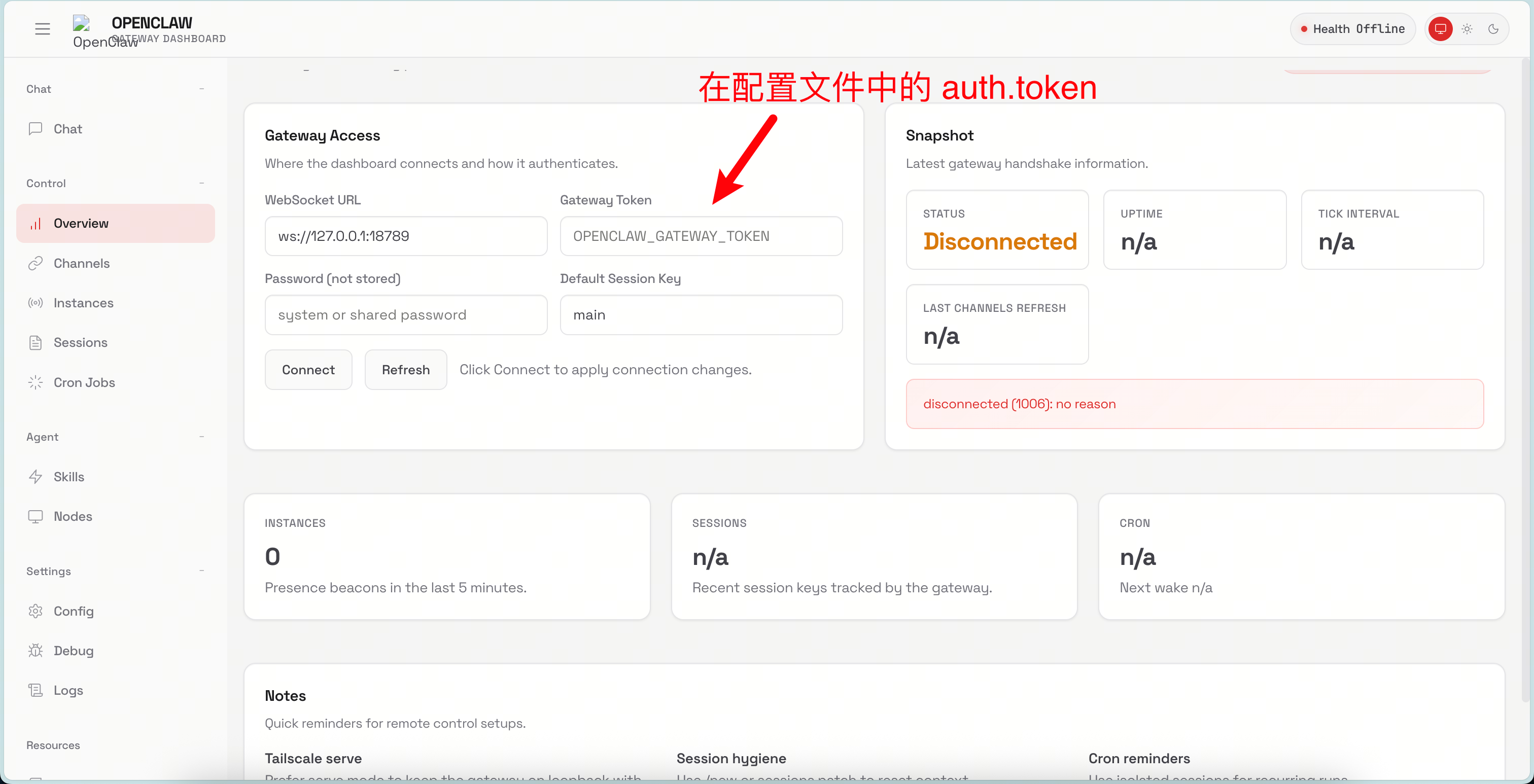
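The comment above says to append the completion line to ~/.bashrc. A blind append adds a duplicate every time the setup is repeated. Here is a minimal sketch of an idempotent append; the helper name append_once is hypothetical.

```bash
#!/usr/bin/env bash
# Append LINE to FILE only if FILE does not already contain that exact line.
append_once() {
  local line="$1" file="$2"
  touch "$file" || return 1
  # Start on a fresh line if the file does not end with a newline.
  if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
    printf '\n' >> "$file"
  fi
  # -x: whole-line match, -F: fixed string (no regex), -q: no output.
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: append_once 'source <(openclaw completion --shell bash)' ~/.bashrc, then open a new shell or run source ~/.bashrc.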

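Note: by default the gateway only listens locally (the "bind": "loopback" setting in the configuration). If OpenClaw runs on a remote VM, you therefore cannot reach it at the VM's IP and port. To allow remote access, change the gateway settings in ~/.openclaw/openclaw.json.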
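If you would rather leave the gateway bound to loopback than expose it on the network, a standard alternative is an SSH local port forward. Run it on your own machine; connections to 127.0.0.1:18789 there are then carried to the VM's loopback interface. This is a minimal sketch. 18789 is the gateway port from the configuration below, user@your-vm is a placeholder, and both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Build the -L spec: local PORT -> 127.0.0.1:PORT as seen from the remote host.
forward_spec() {
  local port="${1:-18789}"
  echo "${port}:127.0.0.1:${port}"
}

# Run on your local machine. -N: no remote command, only forwarding. Ctrl-C closes the tunnel.
openclaw_tunnel() {
  local host="${1:-}" port="${2:-18789}"
  if [ -z "$host" ]; then
    echo "usage: openclaw_tunnel user@host [port]" >&2
    return 1
  fi
  ssh -N -L "$(forward_spec "$port")" "$host"
}
```

Usage: openclaw_tunnel user@your-vm, then use http://127.0.0.1:18789 on your local machine. The gateway's token authentication still applies.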

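4. Edit the configuration file (~/.openclaw/openclaw.json)

Two alternative configurations are shown below; use the one that matches your Volcano Engine plan.

# Volcano Engine Ark (火山方舟) endpoint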

{
  "meta": {
    "lastTouchedVersion": "2026.1.30",
    "lastTouchedAt": "2026-02-01T06:57:34.845Z"
  },
  "wizard": {
    "lastRunAt": "2026-02-01T04:46:26.647Z",
    "lastRunVersion": "2026.1.30",
    "lastRunCommand": "onboard",
    "lastRunMode": "local"
  },
  "auth": {
    "profiles": {
      "volcengine:default": {
        "provider": "volcengine",
        "mode": "api_key"
      }
    }
  },
  "models": {
    "providers": {
      "ark": {
        "baseUrl": "https://ark.cn-beijing.volces.com/api/v3",
        "apiKey": "xxxx",
        "api": "openai-completions",
        "models": [
          {
            "id": "glm-4-7-251222",
            "name": "glm-4-7-251222",
            "reasoning": false,
            "input": ["text"],
            "cost": {
              "input": 0,
              "output": 0,
              "cacheRead": 0,
              "cacheWrite": 0
            },
            "contextWindow": 64000,
            "maxTokens": 8192
          }
        ]
      }
    }
  },
  "agents": {
    "defaults": {
      "model": {
        "primary": "ark/glm-4-7-251222"
      },
      "models": {
        "ark/glm-4-7-251222": {}
      },
      "workspace": "/home/wangdefeng.1/.openclaw/workspace",
      "compaction": {
        "mode": "safeguard"
      },
      "maxConcurrent": 4,
      "subagents": {
        "maxConcurrent": 8
      }
    }
  },
  "messages": {
    "ackReactionScope": "group-mentions"
  },
  "commands": {
    "native": "auto",
    "nativeSkills": "auto"
  },
  "web": {},
  "channels": {},
  "gateway": {
    "port": 18789,
    "mode": "local",
    "bind": "loopback",
    "auth": {
      "mode": "token",
      "token": "xxxx"
    },
    "tailscale": {
      "mode": "off",
      "resetOnExit": false
    }
  },
  "plugins": {
    "entries": {
      "feishu": {
        "enabled": true
      }
    },
    "installs": {
      "feishu": {
        "source": "npm",
        "spec": "@m1heng-clawd/feishu",
        "installPath": "/home/wangdefeng.1/.openclaw/extensions/feishu",
        "version": "0.1.4",
        "installedAt": "2026-02-01T06:53:27.108Z"
      }
    }
  }
}
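A typo in this file, such as a missing comma or an extra closing brace, is easy to make when editing by hand. Below is a minimal sketch that backs the file up and checks that it still parses. It assumes you keep the file as plain JSON like the example above; whether OpenClaw also accepts comments or trailing commas is not covered here. It uses Python's standard json.tool module, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Copy FILE to FILE.bak.<timestamp>, preserving mode and timestamps.
backup_config() {
  cp -p -- "$1" "$1.bak.$(date +%Y%m%d%H%M%S)"
}

# Succeed only if FILE parses as strict JSON; on failure json.tool prints the line and column.
check_json() {
  python3 -m json.tool "$1" >/dev/null
}
```

Typical use: backup_config ~/.openclaw/openclaw.json, edit the file, then run check_json ~/.openclaw/openclaw.json before restarting the gateway.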

# Alternative: Volcano Engine personal Coding Plan

{
  "meta": {
    "lastTouchedVersion": "2026.1.30",
    "lastTouchedAt": "2026-02-01T05:22:08.237Z"
  },
  "wizard": {
    "lastRunAt": "2026-02-01T04:46:26.647Z",
    "lastRunVersion": "2026.1.30",
    "lastRunCommand": "onboard",
    "lastRunMode": "local"
  },
  "auth": {
    "profiles": {
      "volcengine:default": {
        "provider": "volcengine",
        "mode": "api_key"
      }
    }
  },
  "models": {
    "providers": {
      "volcengine": {
        "baseUrl": "https://ark.cn-beijing.volces.com/api/coding/v3",
        "apiKey": "xxxxx",
        "api": "openai-completions",
        "models": [
          {
            "id": "ark-code-latest",
            "name": "ark-code-latest"
          }
        ]
      }
    }
  },
  "agents": {
    "defaults": {
      "model": {
        "primary": "volcengine/ark-code-latest"
      },
      "models": {
        "volcengine/ark-code-latest": {
          "alias": "volcengine"
        }
      },
      "workspace": "/home/wangdefeng.1/.openclaw/workspace",
      "compaction": {
        "mode": "safeguard"
      },
      "maxConcurrent": 4,
      "subagents": {
        "maxConcurrent": 8
      }
    }
  },
  "messages": {
    "ackReactionScope": "group-mentions"
  },
  "commands": {
    "native": "auto",
    "nativeSkills": "auto"
  },
  "web": {},
  "gateway": {
    "port": 18789,
    "mode": "local",
    "bind": "loopback",
    "auth": {
      "mode": "token",
      "token": "xxx"
    },
    "tailscale": {
      "mode": "off",
      "resetOnExit": false
    }
  }
}
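Before pointing OpenClaw at either endpoint, it can help to confirm that the API key and model ID work on their own. Both providers are configured with "api": "openai-completions". This minimal sketch therefore assumes each baseUrl accepts an OpenAI-style POST /chat/completions with a Bearer token. ARK_API_KEY is simply the variable name this sketch reads the key from, and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Minimal OpenAI-style chat request body for MODEL (the model IDs used here contain no quotes).
chat_body() {
  printf '{"model":"%s","messages":[{"role":"user","content":"ping"}],"max_tokens":16}' "$1"
}

# POST a one-message chat to BASE_URL/chat/completions and print the raw response.
smoke_test() {
  local base_url="${1%/}" model="$2"
  if [ -z "${ARK_API_KEY:-}" ]; then
    echo "export ARK_API_KEY first" >&2
    return 1
  fi
  curl -sS "${base_url}/chat/completions" \
    -H "Authorization: Bearer ${ARK_API_KEY}" \
    -H "Content-Type: application/json" \
    -d "$(chat_body "$model")"
}
```

Usage, one line per configuration above:

smoke_test https://ark.cn-beijing.volces.com/api/v3 glm-4-7-251222

smoke_test https://ark.cn-beijing.volces.com/api/coding/v3 ark-code-latest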

Restart the gateway so the changes take effect:

openclaw gateway stop

openclaw gateway start
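This guide does not say whether openclaw gateway start waits until the gateway is accepting connections. A polling check makes the restart verifiable either way. Below is a minimal sketch that retries the port until it opens, using bash's built-in /dev/tcp redirection so no extra tools are needed. 18789 is the gateway port from the configuration above, and wait_for_port is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Poll HOST:PORT once per second until a TCP connection succeeds or TIMEOUT seconds pass.
# /dev/tcp/HOST/PORT is a bash redirection feature, not a real file.
wait_for_port() {
  local host="$1" port="$2" timeout="${3:-30}" i
  for ((i = 0; i < timeout; i++)); do
    # Open a connection on fd 3; it is closed again when the subshell exits.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

Usage: openclaw gateway start && wait_for_port 127.0.0.1 18789 30 && echo "gateway is listening"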

5. Connect to Feishu

Reference: https://www.cnblogs.com/catchadmin/p/19545341#%E5%AF%B9%E6%8E%A5%E9%A3%9E%E4%B9%A6

# Plugin source code: https://github.com/m1heng/clawdbot-feishu

openclaw plugins install @m1heng-clawd/feishu

openclaw config set channels.feishu.appId "your Feishu App ID"

openclaw config set channels.feishu.appSecret "your Feishu App Secret"

openclaw config set channels.feishu.enabled true
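The App ID and App Secret above are typed directly on the command line, where they end up in shell history. This minimal sketch runs the same three openclaw config set commands but takes the values from the environment or from an interactive prompt; the secret is read without echo. The values are still passed as arguments to openclaw. FEISHU_APP_ID, FEISHU_APP_SECRET, and configure_feishu are names made up for this sketch.

```bash
#!/usr/bin/env bash
# Run the guide's three Feishu config commands without typing the credentials into the command line.
configure_feishu() {
  local app_id="${FEISHU_APP_ID:-}" app_secret="${FEISHU_APP_SECRET:-}"
  # Fall back to prompting; -s keeps the secret from being echoed to the terminal.
  if [ -z "$app_id" ]; then
    read -r -p "Feishu App ID: " app_id || true
  fi
  if [ -z "$app_secret" ]; then
    read -r -s -p "Feishu App Secret: " app_secret || true
    echo >&2
  fi
  if [ -z "$app_id" ] || [ -z "$app_secret" ]; then
    echo "both the App ID and the App Secret are required" >&2
    return 1
  fi
  openclaw config set channels.feishu.appId "$app_id" &&
    openclaw config set channels.feishu.appSecret "$app_secret" &&
    openclaw config set channels.feishu.enabled true
}
```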